Which cooling system is best for your data centre?

Data centres are growing bigger and more diverse, and they must cope with increasingly complex demands as their importance to global IT infrastructure increases. On top of this, data centre operators face mounting pressure to reduce the energy these facilities consume, as climate change and urban development demand smaller footprints.

More than ever, efficient and effective cooling systems are becoming the difference between a modern data centre and a dinosaur vulnerable to disruption.

Back in the old days (yes, the early 2000s count as the old days now), data centre cooling was simply thought of as a sunk cost. Companies shelled out whatever money was necessary to run huge air conditioning (AC) units to get their server rooms as cool as possible. Most of these facilities operated at a PUE (power usage effectiveness, the ratio of total facility power to the power used by the IT equipment) as high as 3, meaning that for every watt reaching the servers, another two watts went on cooling and other overhead.

Over the last decade or so, however, increased attention has been paid to cooling data centres efficiently. It makes sense, since maintaining a suitable environmental temperature in a data centre facility can account for as much as 40% of its total power demands.
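Both PUE and the cooling share are simple ratios of power figures. The minimal Python sketch below computes each one; the function names and the facility figures are hypothetical, chosen only to reproduce a PUE of 3 and a 40% cooling share.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def cooling_share(cooling_kw: float, total_facility_kw: float) -> float:
    """Fraction of the facility's total power draw that goes to cooling."""
    return cooling_kw / total_facility_kw


# Hypothetical legacy facility: 500 kW of servers, 1,500 kW drawn in total.
legacy_pue = pue(total_facility_kw=1500, it_load_kw=500)
print(f"PUE {legacy_pue:.1f}: {legacy_pue - 1:.1f} W of overhead per W of IT load")

# Hypothetical facility where cooling accounts for 400 kW of a 1,000 kW total.
print(f"Cooling share: {cooling_share(400, 1000):.0%}")
```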

According to the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), the recommended operating temperature range for a data centre is roughly 64° to 81°F (18° to 27°C). However, many data centres run much colder than this to minimise the risk of malfunctions and overheating, and this overcooling is a major reason these facilities draw so much power.
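To check sensor readings against that band, a minimal sketch might convert Fahrenheit to Celsius and flag anything outside 18 to 27°C. The "overcooled" label, the function names and the sample readings are hypothetical, and the sketch assumes the readings are taken where air enters the servers.

```python
# Recommended range quoted above, in degrees Celsius.
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


def fahrenheit_to_celsius(temp_f: float) -> float:
    return (temp_f - 32.0) * 5.0 / 9.0


def classify_inlet_temp(temp_c: float) -> str:
    """Label a temperature relative to the recommended band (hypothetical labels)."""
    if temp_c < RECOMMENDED_MIN_C:
        return "overcooled"  # colder than needed, so cooling energy is being wasted
    if temp_c > RECOMMENDED_MAX_C:
        return "too hot"
    return "ok"


# Hypothetical sensor readings in Fahrenheit.
for reading_f in (60.0, 72.0, 85.0):
    temp_c = fahrenheit_to_celsius(reading_f)
    print(f"{reading_f:.0f}°F = {temp_c:.1f}°C -> {classify_inlet_temp(temp_c)}")
```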

From hot aisle containment and evaporative cooling to liquid and free cooling, there are several promising new technologies taking hold across the industry right now, alongside ongoing efforts to improve older techniques. We’ve taken the time to break down some of the advantages and disadvantages of these methods.

CRAC

CRAC, short for Computer Room Air Conditioner, is an evolution of the traditional method of cooling a server room. It works by extracting hot air and pumping in cold air, in roughly the same way that a home AC unit keeps your house cool, while also monitoring and controlling the air distribution and humidity in the server room. (You wouldn’t think it, but the acceptable humidity for data centre servers is fairly broad, somewhere between 20% and 80%; keeping it above the lower end cuts down on electrostatic discharge.)
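The monitoring-and-control role described above can be sketched as a simple thermostat plus humidistat. This is a hypothetical illustration, not how any particular CRAC product works: the setpoint, deadband and names are assumptions, and only the 20% to 80% humidity band comes from the text.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical control limits; the humidity band matches the 20-80% range above.
TEMP_SETPOINT_C = 24.0
TEMP_DEADBAND_C = 1.0
RH_MIN = 20.0
RH_MAX = 80.0


@dataclass
class RoomReading:
    air_temp_c: float         # air temperature measured at the unit's intake
    relative_humidity: float  # percent


def crac_actions(reading: RoomReading) -> List[str]:
    """Return the adjustments a simple thermostat-plus-humidistat controller would make."""
    actions = []
    if reading.air_temp_c > TEMP_SETPOINT_C + TEMP_DEADBAND_C:
        actions.append("increase cooling")
    elif reading.air_temp_c < TEMP_SETPOINT_C - TEMP_DEADBAND_C:
        actions.append("reduce cooling")
    if reading.relative_humidity < RH_MIN:
        actions.append("humidify")  # dry air raises the risk of electrostatic discharge
    elif reading.relative_humidity > RH_MAX:
        actions.append("dehumidify")
    return actions or ["hold"]


print(crac_actions(RoomReading(air_temp_c=27.5, relative_humidity=15.0)))
print(crac_actions(RoomReading(air_temp_c=24.2, relative_humidity=45.0)))
```

The deadband keeps the unit from toggling on every small temperature fluctuation around the setpoint.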

According to a report by DPS Telecom, CRAC systems push cool air up through perforated floor tiles into the aisles between the racks. The servers draw in this cool air and exhaust hot air into the opposite hot aisle. The CRAC units on the floor then pull in the hot air from the hot aisles, cool it, and release it underneath the floor tiles, completing the cycle.

This lets the CRAC unit’s air handler keep cool air circulating through the system and maintain a steady airflow through the room.
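How much air this cycle has to move follows from the sensible heat equation: airflow = heat load / (air density × specific heat × temperature rise). A minimal sketch, assuming typical room-temperature air properties and a hypothetical 10 kW rack whose exhaust runs 12 K warmer than its intake:

```python
AIR_DENSITY_KG_M3 = 1.2   # approx. density of air near 20 °C at sea level
AIR_CP_J_KG_K = 1005.0    # approx. specific heat capacity of air
CFM_PER_M3_S = 2118.88    # cubic feet per minute in one cubic metre per second


def required_airflow_m3_s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry heat_load_w with a delta_t_k rise across the servers."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


# Hypothetical rack: 10 kW of IT load, air warms by 12 K from cold aisle to hot aisle.
flow = required_airflow_m3_s(10_000, 12)
print(f"{flow:.2f} m^3/s ({flow * CFM_PER_M3_S:.0f} CFM)")
```

Halving the temperature rise doubles the airflow required, which is one reason hot air leaking back into the cold aisle is so costly.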

Hot Aisle Containment

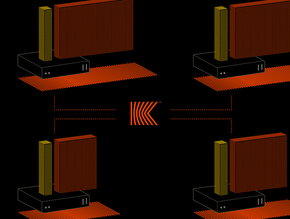

Mentioned briefly above, hot aisle containment is a method of arranging server racks that has gained a lot of popularity, in combination with other cooling methods, for its low cost and high return. In essence, the method separates the areas of hot and cold air, allowing operators to strategically place CRAC units and only cool certain areas rather than the whole server room.
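One way to see why separating hot and cold air pays off is a simplified mixing model: if a fraction r of the hot exhaust leaks back into the cold aisle, server inlets run warmer than the supply air, so the cooling units must supply colder air to compensate. The sketch below assumes perfect mixing, a fixed temperature rise across the servers and hypothetical recirculation fractions; the function names are illustrative.

```python
def recirculation_penalty_k(server_delta_t_k: float, recirculation: float) -> float:
    """Extra warming at the server inlet caused by hot exhaust mixing back in.

    Inlet = (1 - r) * supply + r * exhaust, with exhaust = inlet + delta_t.
    Solving for the inlet gives inlet = supply + delta_t * r / (1 - r).
    """
    if not 0.0 <= recirculation < 1.0:
        raise ValueError("recirculation fraction must be in [0, 1)")
    return server_delta_t_k * recirculation / (1.0 - recirculation)


def max_supply_temp_c(target_inlet_c: float, server_delta_t_k: float, recirculation: float) -> float:
    """Warmest supply air that still keeps server inlets at the target temperature."""
    return target_inlet_c - recirculation_penalty_k(server_delta_t_k, recirculation)


# Hypothetical recirculation fractions: an open room versus a contained hot aisle.
for label, r in (("open room", 0.30), ("contained hot aisle", 0.05)):
    supply = max_supply_temp_c(target_inlet_c=27.0, server_delta_t_k=12.0, recirculation=r)
    print(f"{label}: supply air can be up to {supply:.1f}°C")
```

With a 27°C inlet target and a 12 K rise, cutting recirculation from 30% to 5% lets the supply air run roughly 4.5°C warmer in this simplified picture, which is where containment's energy savings come from.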

In-Rack Heat Extraction

This is the first of the seriously modern designs, and it takes the concept behind hot aisle containment a step further: you don’t have to cool a whole room to cool a rack of servers. In-rack heat extraction removes the heat generated by servers directly, pumping it outside and cooling the hardware using compressors and chillers inside the rack itself. The major drawback of this method is that it limits computational density per rack, which in turn limits the amount of computing power you can fit on a single floor.

Evaporative Cooling

Another method that has become increasingly popular in recent years takes advantage of the reduction in temperature resulting from the evaporation of water into air. “The principle underlying evaporative cooling is the fact that water must have heat applied to it to change from a liquid to a vapor. When evaporation occurs, this heat is taken from the water that remains in the liquid state, resulting in a cooler liquid,” notes a report by the Baltimore Aircoil Company.
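The quote explains why evaporation cools; how much water that takes follows from water’s latent heat of vaporisation. A minimal sketch, assuming a latent heat of about 2.45 MJ/kg (water near room temperature) and a hypothetical 100 kW heat load, and counting only the evaporation itself:

```python
# Approximate latent heat of vaporisation of water near 20 °C (assumed reference value).
LATENT_HEAT_J_PER_KG = 2.45e6


def water_evaporated_kg_per_hour(heat_load_kw: float) -> float:
    """Mass of water that must evaporate each hour to absorb heat_load_kw.

    Counts only the latent heat of evaporation; sensible heating of the water
    and any other water losses are ignored.
    """
    heat_w = heat_load_kw * 1000.0
    kg_per_second = heat_w / LATENT_HEAT_J_PER_KG
    return kg_per_second * 3600.0


# Hypothetical 100 kW heat load.
print(f"{water_evaporated_kg_per_hour(100):.0f} kg of water evaporated per hour")
```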

Liquid Immersion Cooling

One of the newest and potentially most exciting forms of data centre cooling is liquid immersion cooling. Water-cooled data centre racks have fantastic power efficiency, but the risk of any water at all coming into direct contact with several hundred thousand dollars’ worth of computing equipment has held back adoption of the technology.

Liquid immersion cooling uses a dielectric coolant fluid rather than water to absorb the heat from server components. Unlike water, dielectric fluid can come into direct contact with electrical components (like CPUs, drives and memory) without causing damage.

Liquid coolant circulating through a server’s hot components and carrying the heat away to a heat exchanger is hundreds of times more efficient than using massive CRAC devices to do the same job, and the technology has produced some staggeringly low PUE readings.
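Much of that efficiency gap comes from how much heat a given volume of coolant can carry. Dielectric coolants vary by product and generally hold less heat per litre than water, so the sketch below uses water as a reference liquid; the property values are approximate room-temperature figures, and the names are illustrative.

```python
# Approximate room-temperature properties (assumed reference values).
FLUIDS = {
    "air": {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0},
    "water": {"density_kg_m3": 998.0, "cp_j_kg_k": 4182.0},
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Joules needed to warm one cubic metre of the fluid by one kelvin."""
    props = FLUIDS[fluid]
    return props["density_kg_m3"] * props["cp_j_kg_k"]


ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
print(f"Per unit volume, water carries about {ratio:,.0f}x as much heat as air")
```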